ABSTRACT

An object detection system for autonomous vehicles, comprising a radar unit and at least one ultra-low phase noise frequency synthesizer, is provided. The radar unit is configured for detecting the presence and characteristics of one or more objects in various directions.

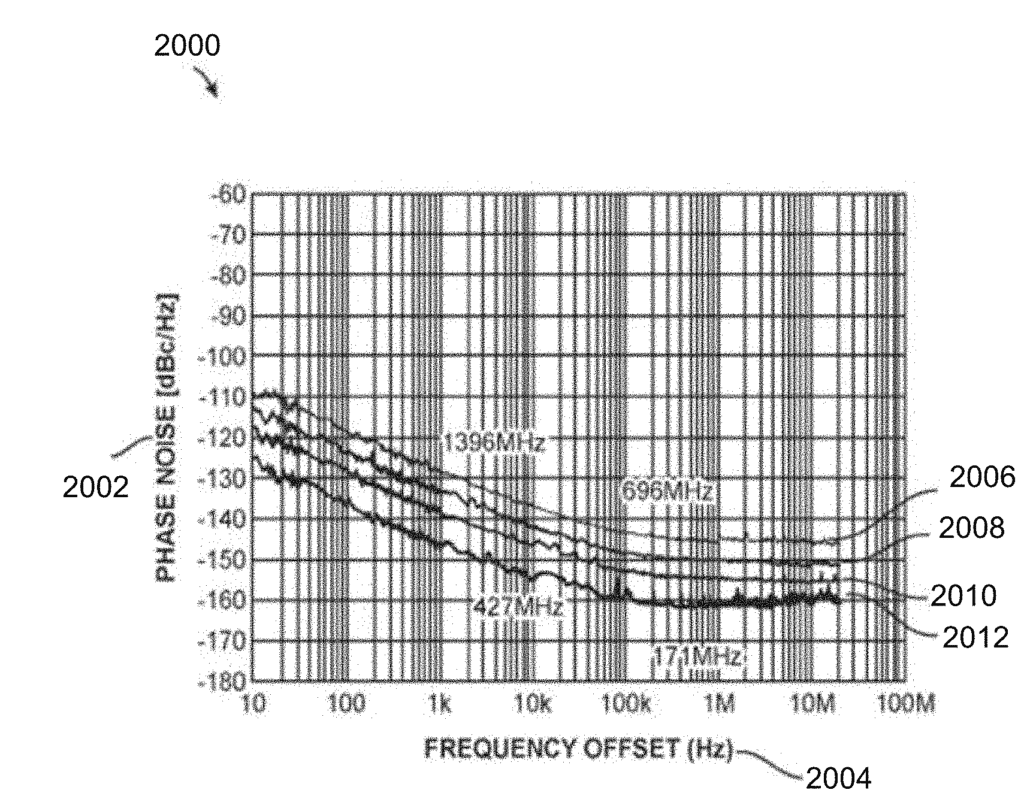

The radar unit may include a transmitter for transmitting at least one radio signal, and a receiver for receiving at least one radio signal returned from one or more objects. The ultra-low phase noise frequency synthesizer may utilize a Clocking device, Sampling Reference PLL, at least one fixed frequency divider, DDS, and main PLL to reduce phase noise from the returned radio signal. This proposed system overcomes deficiencies of the current generation state-of-the-art Radar Systems by providing a much lower level of phase noise which would result in improved performance of the radar system in terms of target detection, characterization, etc. Further, a method for autonomous vehicles is also disclosed.

Autonomous vehicles with ultra-low phase noise frequency synthesizer

An Inventor: Dr. Tal Lavian

Embodiments of the present disclosure are generally related to sensors for autonomous vehicles (for example, Self-Driving Cars) and in particular to systems that provide ultra-low phase noise frequency generation for Radar Applications for autonomous vehicles.

BACKGROUND

Autonomous Cars: Levels of Autonomous Cars:


According to the Society of Automotive Engineers (SAE), cars and vehicles in general are classified into six levels of automation (Level 0 through Level 5):

- Level 0: Automated system has no vehicle control, but may issue warnings.

- Level 1: The driver must be ready to take control at any time. The automated system may include features such as Adaptive Cruise Control (ACC), Parking Assistance with automated steering, and Lane Keeping Assistance (LKA) Type II in any combination.

- Level 2: The driver is obliged to detect objects and events and respond if the automated system fails to respond properly. The automated system executes accelerating, braking, and steering. The automated system can deactivate immediately upon takeover by the driver.

- Level 3: Within known, limited environments (such as freeways), the driver can safely turn their attention away from driving tasks, but must still be prepared to take control when needed.

- Level 4: The automated system can control the vehicle in all but a few environments such as severe weather. The driver must enable the automated system only when it is safe to do so. When enabled, driver attention is not required.

- Level 5: Other than setting the destination and starting the system, no human intervention is required. The automatic system can drive to any location where it is legal to drive and make its own decisions.

Sensor Technologies: Conventional cars and other types of vehicles are operated by humans, who rely on senses such as sight and sound to understand their environment and use their cognitive capabilities to make decisions according to these inputs. For autonomous cars and other autonomous vehicles, the human senses must be replaced with electronic sensors and the cognitive capabilities by electronic computing power. The most common sensor technologies are as follows:

LIDAR (Light Detection and Ranging): A technology that measures distance by illuminating its surroundings with laser light and receiving the reflections. However, the maximum power of the laser light must be kept limited to make it safe for the eyes, as laser light can easily be absorbed by the eyes. Such LIDAR systems are usually quite large and expensive, and do not blend in well with the overall design of a car/vehicle. Such systems can weigh as much as tens of kilograms and in some cases cost up to $100,000.

Radar (Radio Detection and Ranging): These days, Radar systems can be found as a single-chip solution that is lightweight and cost-effective. These systems work very well regardless of lighting or weather conditions and have satisfactory accuracy in determining the speed of objects around the vehicle. That said, mainly because of phase uncertainties, the resolution of Radar systems is usually not sufficient.

Ultrasonic Sensors: These sensors use sound waves and measure their reflections from objects surrounding the vehicle. These sensors are very accurate and work in every type of lighting condition. Ultrasonic sensors are also small and cheap and work well in almost any kind of weather, but that is because of their very short range of a few meters.

Passive Visual Sensing: This type of sensing uses cameras and image recognition algorithms. This sensor technology has one advantage that none of the previous sensor technologies have: color and contrast recognition. As with any camera-based system, the performance of these systems degrades with bad lighting or adverse weather conditions.

The table below is designed to provide a better understanding of the advantages and disadvantages of the different current sensor technologies and their overall contribution to an autonomous vehicle. It scores the different sensors on a scale of 1 to 3, where 3 is the best score:

| Item | LIDAR | RADAR | Ultrasonic | Camera |
| --- | --- | --- | --- | --- |
| Proximity Detection | 1 | 2 | 3 | 1 |
| Range | 2 | 2 | 1 | 3 |
| Resolution | 2 | 1 | 1 | 3 |
| Operation in darkness | 3 | 3 | 3 | 1 |
| Operation in light | 3 | 3 | 3 | 2 |
| Operation in adverse weather | 2 | 3 | 3 | 1 |
| Identifies color or contrast | 1 | 1 | 1 | 3 |
| Speed measurement | 2 | 3 | 1 | 1 |
| Size | 1 | 3 | 3 | 3 |
| Cost | 1 | 3 | 3 | 3 |
| Total | 18 | 24 | 22 | 21 |
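
The Total row of the table can be reproduced by summing each sensor's column. The short Python sketch below does only that arithmetic; the dictionary layout and the names `SCORES` and `column_totals` are illustrative choices, not part of the disclosure.

```python
# Scores copied from the table above (1 = worst, 3 = best).
SENSORS = ("LIDAR", "RADAR", "Ultrasonic", "Camera")

SCORES = {
    "Proximity Detection":          (1, 2, 3, 1),
    "Range":                        (2, 2, 1, 3),
    "Resolution":                   (2, 1, 1, 3),
    "Operation in darkness":        (3, 3, 3, 1),
    "Operation in light":           (3, 3, 3, 2),
    "Operation in adverse weather": (2, 3, 3, 1),
    "Identifies color or contrast": (1, 1, 1, 3),
    "Speed measurement":            (2, 3, 1, 1),
    "Size":                         (1, 3, 3, 3),
    "Cost":                         (1, 3, 3, 3),
}


def column_totals(scores):
    """Sum every criterion row per sensor and return {sensor: total}."""
    totals = dict.fromkeys(SENSORS, 0)
    for row in scores.values():
        for sensor, value in zip(SENSORS, row):
            totals[sensor] += value
    return totals


TOTALS = column_totals(SCORES)
print(TOTALS)
```

Note that every criterion carries the same weight in this sum; a different weighting of the criteria would change the ranking.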

- The above presentation of the state of the technology provides a high-level view of the advantages and disadvantages of the technologies from different perspectives.

As shown in the table above, the available sensors for existing autonomous vehicles are LIDAR, Sonar, passive vision (cameras), and radar. Many of these sensors come with significant drawbacks, while radar systems do not experience most of these drawbacks and thus score better than the other sensors, based on the table shown above:

For example, LIDAR systems have a “dead zone” in their immediate surroundings (as shown in FIG. 30A), while a Radar system will be able to cover the immediate surroundings of a vehicle as well as long range with enhanced accuracy.

In order to eliminate the “dead zone” as much as possible, LIDARs are mounted tall above the vehicle (as shown in FIG. 30B). This limits the options of using parking garages, causes difficulty in the use of rooftop accessories, and finally also makes the vehicle less marketable, since such a tower does not blend in well with the design of a vehicle.

Typical LIDAR systems generate enormous amounts of data which require expensive and complicated computation capabilities, while Radar systems generate only a fraction of this data and reduce the cost and complication of onboard computation systems significantly. For example, some types of LIDAR systems generate data at rates of about 1 Gb/s, which requires a substantial amount of computation by powerful computers. In some cases, these massive computations require additional computation and correlation of information from other sensors and sources of information. In some cases, the source for additional computations is based on detailed road information collected over time in databases or in enhanced maps. Computations and correlations can be performed against past information and data collected over time.

Typical LIDAR systems are sensitive to adverse weather such as rain, fog, and snow, while Radar sensors are not. A radar system will stay reliable and accurate in adverse weather conditions (as shown in FIG. 31).
LIDAR systems use mechanical rotation mechanisms that are prone to failure, whereas Radars are solid-state, have no moving parts, and as such have a minimal rate of failures.

Typical LIDAR systems rely on a rotation speed of around 5-15 Hz. This means that if a vehicle moves at a speed of 65 mph (about 95 ft/s), the distance the vehicle travels between “looks” is about 10 ft at a rotation rate of 10 Hz. Radar sensor systems are able to continuously scan their surroundings, especially when these systems use one transmitting and one receiving antenna (Bistatic system) (as depicted in FIG. 32). Further, LIDAR systems are not accurate in determining speed, and autonomous vehicles rely on Radar for accurate speed detection.

Sonar: Sonar sensors are very accurate but can cover only the immediate surroundings of a vehicle; their range is limited to several meters. The Radar system disclosed in this patent is capable of covering these immediate surroundings as well, and with similar accuracy. Further, Sonar sensors cannot be hidden behind a car's plastic parts, which poses a design problem, whereas Radars can easily be hidden behind these parts without being noticed.

Passive Visual Sensing (Cameras): Passive visual sensing uses the available light to determine the surroundings of an autonomous vehicle. In poor lighting conditions, the performance of passive visual sensing systems degrades significantly and often depends on the light that the vehicle itself provides, and as such does not provide any benefit over the human eye. Radar systems, on the other hand, are completely agnostic to lighting conditions and perform the same regardless of light (as shown in FIG. 33).

Passive visual sensing is very limited in adverse weather conditions such as heavy rain, fog, or snow; Radar systems are much more capable of handling these situations.
Passive visual systems also create great amounts of data that need to be handled in real time and thus require expensive and complicated computation capabilities, while Radar systems create much less data that is easier to handle. Passive visual systems cannot see “through” objects while Radar can, which is useful in determining whether there are hazards behind vegetation, for instance wildlife that is about to cross the road.

Further, it is easily understandable that in order to cover all possible scenarios, all (or most) of these sensors need to work together as a well-tuned orchestra. But even if that is the case, under adverse lighting and weather conditions some sensor types suffer from performance degradation, while Radar performance stays practically stable under all of these conditions. The practical conclusion is that Radar performance is not driven by environmental factors as much as by its own technology deficiencies, or a specific deficiency of one of its internal components, which the invention here will solve.

Summarizing all of the advantages and disadvantages mentioned above, it is clear that Radar systems are efficient in terms of cost, weight, size, and computing power. Radar systems are also very reliable under adverse weather conditions and all possible lighting scenarios. Further, SAR Radar Systems may be implemented to create a detailed picture of the surroundings and even distinguish between different types of material.

However, the drawback of existing Radar sensors is the impact on their accuracy of the phase noise of their frequency source, the synthesizer. Thus, an enhanced system is required (for purposes such as autonomous vehicles) that may utilize the benefits of the Radar system by mitigating or eliminating the corresponding existing drawbacks.
For example, the required enhanced system, in addition to improving common existing Radar systems, should also improve bistatic or multistatic Radar designs that use the same platform or different platforms to transmit and receive, significantly reducing the phase ambiguity that is created by the distance between the transmitting antenna and the receiving antenna.

Essentially, a signal that is sent out to cover objects (here: Radar Signal) is not completely spectrally clean but is sent out accompanied by phase noise in the shape of a “skirt” in the frequency domain, and will meet a similar one in the receiver signal processing once it is received back. In a very basic target detection system, fast-moving objects will shift the frequency far enough from the carrier that the weak received signal will be outside of this phase noise “skirt”. Slow-moving objects, however, such as cars, pedestrians, bicycles, animals, etc., might create a received signal that is closer to the carrier and weaker than the phase noise; this signal will be buried under the noise and will be practically non-detectable or non-recognizable.

More advanced systems use modulated signals (such as FMCW), but the same challenge of identifying slow-moving objects remains. Distinguishing two physically close objects from one larger object is also made difficult by phase noise.

Another advanced Radar System worth mentioning is Synthetic Aperture Radar (or SAR), which is described in a different section of this disclosure.

Many algorithms and methods have been developed to filter out inaccuracies of Radar-based imaging, detection, and other result processing. Some are more computationally intensive while others are not. What they all have in common is that they are not able to filter out the inherent phase noise of the Radar system itself.

This is crucial since a lot of the information a Radar system relies on is in the phase of the returning signal.
One simple example of this phenomenon is when a Radar signal hits a wall that is perpendicular to the ground or a wall that has an angle that is not 90 degrees relative to the surface: the phases of the return signals will be slightly different, and this information could be “buried” under the phase noise of the Radar system.

Further, speckle noise is a phenomenon where a received Radar signal includes “granular” noise. Basically, these granular dots are created by the sum of all information that is scattered back from within a “resolution cell”. All of these signals can add up constructively, destructively, or cancel each other out. Elaborate filters and methods have been developed, but all of them function better and with less effort when the signals have a better spectral purity, or in other words lower phase noise. One of these methods, just as an example, is the “multiple look” method. When implementing this, each “look” happens from a slightly different point so that the backscatter also looks a bit different. These backscatters are then averaged and used for the final imaging. The downside of this is that the more “looks” are taken, the more averaging happens, and information is lost as with any averaging.

As additional background for this invention, there are a few phenomena that need to be laid out here:

Doppler Effect: The Doppler Effect is the change in frequency or wavelength of a wave for an observer moving relative to its source. This is true for sound waves, electromagnetic waves, and any other periodic event. Most people know about the Doppler Effect from their own experience when they hear a car sounding a siren approaching, passing by, and then receding. During the approach, the sound waves get “pushed” to a higher frequency, and thus the siren seems to have a higher pitch; when the vehicle gains distance, the pitch gets lower since the sound is being “pushed” to a lower frequency.

The physical and mathematical model of this phenomenon is described in the following formula:

$$f = \left( \frac{c + v_r}{c + v_s} \right) f_0$$

- Where $f_0$ is the center frequency of the signal, $c$ is the speed of light, $v_r$ is the velocity of the receiver relative to the sound/radiation source, $v_s$ is the velocity of the sound source relative to the receiver, and $f$ is the resulting shifted frequency.

After simplifying the equation, we get:

$$\Delta f = \frac{\Delta v}{c} f_0$$

- Where Δv is the relative velocity of the sound source to the receiver and Δf is the frequency shift created by the velocity difference. It can easily be seen that when the velocity is positive (the objects get closer to each other) the frequency shift will be up. When the relative velocity is 0, there will be no frequency shift at all, and when the relative velocity is negative (the objects gain distance from one another) the frequency shift is down.

In old-fashioned Radars, the Doppler effect gets a little more complicated, since a Radar sends out a signal and expects to receive, when the signal hits an object, a signal that is lower in power but at the same frequency. If this object is moving, the received signal will be subject to the Doppler effect and, in reality, will not be received at the same frequency as the transmitted signal. The challenge here is that these frequency errors can be very subtle and could be obscured by the phase noise of the system (as shown in FIG. 34). The obvious drawback is that vital information about the velocity of an object gets lost only because of phase noise (see figure below). This is especially true when dealing with objects that move slower than airplanes and missiles, such as cars, bicycles, pedestrians, etc.

Modulated Signals: Newer Radar systems use modulated signals that are broadly called FMCW (Frequency Modulated Continuous Wave), but they can come in all forms and shapes, such as NLCW (Nonlinear Continuous Wave), PMCW (Phase Modulated Continuous Wave), chirps, etc.
The main reason for the use of modulated signals is that old-fashioned Radars need to transmit a lot of power to receive an echo back from a target, while modulated signals and smart receive techniques can do that with much lower transmit power.

Another big advantage of FMCW-based Radar systems is that the distance of a target can be calculated based on Δf from the instantaneous carrier signal rather than travel time. However, herein also lies the problem: to calculate and determine the characteristics of a target accurately, a spectrally clean signal with phase noise as low as technically possible is needed.

Usually, modulated Radar signals are processed with the help of FFT, utilizing signal processing windows and pulse compression algorithms. While these methods are good, phase noise still remains one of the major contributors, if not the largest contributor, to errors and inaccuracies.

The spectral picture of a processed signal is shown in FIG. 35. As one can see, the spectral picture also contains unwanted sidelobes. One major contributor to the sidelobes is the phase noise of the Radar system. Phase noise (sometimes also called Phase Jitter or simply Jitter) corresponds to 20 log(integrated phase noise in rad). This spectral regrowth of sidelobes can cause errors in the determination of the actual distance of a target and can obscure a small target that is close to a larger target. It can also cause errors in target velocity estimation.

Another use of Radar that is sensitive to phase noise is Synthetic Aperture Radar (SAR) of all kinds. These Radars are being used in countless applications, ranging from space exploration through mapping of the earth's surface, ice pack measurement, forest coverage, and various military applications to urban imaging and archaeological surveys.
However, all of these Radar applications share a common drawback: phase noise degrades the quality of the end result or prevents the desired outcome from being achieved. For example, whether we refer to Interferometric SAR (InSAR) or Polarimetric SAR (PolSAR) or a combination of these methods or any other type of SAR or Radar in general, all of them are subject to phase noise effects regardless of the type of waveform/chirp used. Considering the shift in frequency and the low signal strength, there is a probability that the received Radar signal will be buried under the phase noise skirt, and the slower the object, the higher this probability. Again, distinguishing two close objects from one large one is a challenge here. SAR Radars create images of their surroundings, and the accuracy of the images also depends on the phase noise of the signal. Some of these radars can also determine the electromagnetic characteristics of their target, such as the dielectric constant, loss tangent, etc. The accuracy here again depends on the signal quality, which is largely determined by the sidelobes created during the utilization of the FFT algorithm mentioned above, which in turn stem from the phase noise of the system.

Further, in FIG. 36, the sidelobes have been simplified to only a wide overlapping area. This is also very close to what happens in reality because of the way the signal processing algorithms work. As can easily be seen in FIG. 36, weaker return signals can get obscured in the sidelobes of a stronger signal, and the overall available and crucial SNR decreases because every return signal carries sidelobes with it.

Thus, existing Radar systems are challenged when dealing with slow-moving objects such as cars, bicycles, and pedestrians.
Furthermore, these traditional Radar systems, whether using a modulated or non-modulated signal, have difficulties identifying objects that are very close to each other, since one of them will be obscured by the phase noise of the system.

Based on the aforementioned, there is a need for a radar system that can effectively be utilized for autonomous cars by canceling or reducing the phase noise of the received Radar signal. For example, the system should be capable of determining its surroundings (such as by detecting objects therein) cost-effectively and without significant internal phase noise affecting performance.

The system should add as little phase noise as possible to the received Radar signal. Further, the system should be capable of detecting and analyzing the received signal without being affected by the internal phase noise of the receiver. Furthermore, the system should be capable of implementing artificial intelligence to make smart decisions based on the determined surrounding information. Additionally, the system should be capable of overcoming the shortcomings of the existing systems and technologies.
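
To give a sense of scale for the slow-moving-object problem, the simplified Doppler relation from the background above (frequency shift equals relative velocity divided by c, times the center frequency) can be evaluated numerically. The following Python sketch is illustrative only: the 77 GHz carrier and the example speeds are assumptions chosen for the example and are not values stated in this disclosure. The optional `two_way` flag reflects the fact that a radar echo is shifted on the way out and again on the way back, which roughly doubles the one-way figure.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def doppler_shift_hz(relative_velocity_m_s, carrier_hz, two_way=False):
    """Frequency shift from the simplified relation delta_f = (delta_v / c) * f0.

    A positive velocity means the objects approach each other (shift up);
    a negative velocity means they recede (shift down).
    two_way=True doubles the result to model a reflected radar echo.
    """
    shift = relative_velocity_m_s / SPEED_OF_LIGHT_M_S * carrier_hz
    return 2.0 * shift if two_way else shift


if __name__ == "__main__":
    carrier_hz = 77e9  # assumed carrier frequency, for illustration only
    examples = {       # assumed closing speeds in m/s, for illustration only
        "pedestrian": 1.5,
        "bicycle": 6.0,
        "car": 30.0,
    }
    for name, speed in examples.items():
        shift = doppler_shift_hz(speed, carrier_hz)
        print(f"{name:10s} {speed:5.1f} m/s -> {shift:9.1f} Hz from the carrier")
```

Under these assumed numbers, a pedestrian produces a shift of only a few hundred hertz on a carrier of tens of gigahertz, which is the close-to-carrier region where the phase noise skirt is strongest.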

SUMMARY


Some of the Benefits of the Invention:


The present invention emphasizes that by incorporating the ultra-low phase noise synthesizer in the existing Radar system, the performance of the Radar system will be improved substantially in terms of target detection accuracy and resolution, and because of this it can become the dominant sensor for the handling of autonomous cars. Herein, the Synthesizer drastically reduces the phase noise of Radar signals so that such a Radar sensor will be able to replace current sensor systems at very low cost and with reliability under all lighting and adverse weather conditions.

- A system that utilizes an ultra-low phase noise synthesizer will be able to provide data to a processor that can determine the electromagnetic characteristics of an object with sufficient accuracy so that the system is able to determine if the object is a living object, such as a human being or an animal, or if it is inanimate. It will also be able to provide data that is accurate enough to differentiate between the materials that objects are made of, for example between wood and stone.

- Further, as a derivative of the capability to determine the material an object is made of, combined with the capability of electromagnetic waves to penetrate through many materials, an object detection system utilizing an ultra-low phase noise synthesizer will provide data that will enable a processing unit (such as a specialized processor of the object detection system) to find objects that are visually obscured by another object and determine the material of the obscured and obscuring objects. Thus, the system may be able to find a human behind a billboard or wildlife behind a bush, or determine that there are only two bushes, one behind the other.

- According to an embodiment of the present disclosure, an object detection system for autonomous vehicles is disclosed. The object detection system may include a radar unit coupled to at least one ultra-low phase noise frequency synthesizer, configured for detecting the presence of one or more objects in one or more directions, the radar unit comprising: a transmitter for transmitting at least one radio signal to the one or more objects; and a receiver for receiving the at least one radio signal returned from the one or more objects. Further, the object detection system may include the at least one ultra-low phase noise frequency synthesizer that may be utilized in conjunction with the radar unit, for refining both the transmitted and the received signals, and thus determining the phase noise and maintaining the quality of the transmitted and the received radio signals, wherein the at least one ultra-low phase noise frequency synthesizer comprises: (i) at least one clocking device configured to generate at least one first clock signal of at least one first clock frequency; (ii) at least one sampling Phase Locked Loop (PLL), wherein the at least one sampling PLL comprises: (a) at least one sampling phase detector configured to receive the at least one first clock signal and a single reference frequency to generate at least one first analog control voltage; and (b) at least one reference Voltage Controlled Oscillator (VCO) configured to receive the at least one analog control voltage to generate the single reference frequency; and (c) a Digital Phase/Frequency detector configured to receive the at least one first clock signal and a single reference frequency to generate at least a second analog control voltage; and (d) a two-way DC switch in communication with the Digital Phase/Frequency detector and the sampling phase detector; (iii) at least one first fixed frequency divider configured to receive the at least one reference frequency and to divide the at least one reference frequency by a first predefined factor to generate at least one clock signal for at least one high frequency low phase noise Direct Digital Synthesizer (DDS) clock signal; (iv) at least one high frequency low phase noise DDS configured to receive the at least one DDS clock signal and to generate at least one second clock signal of at least one second clock frequency; and (v) at least one main Phase Locked Loop (PLL).

- Hereinabove, the main PLL may include: (a) at least one high frequency Digital Phase/Frequency detector configured to receive and compare the at least one second clock frequency and at least one feedback frequency to generate at least one second analog control voltage and at least one digital control voltage; (b) at least one main VCO configured to receive the at least one first analog control voltage or the at least one second analog control voltage and generate at least one output signal of at least one output frequency, wherein the at least one digital control voltage controls which of the at least one first analog control voltage or the at least one second analog control voltage is received by the at least one main VCO; (c) at least one down convert mixer configured to mix the at least one output frequency and the reference frequency to generate at least one intermediate frequency; and (d) at least one second fixed frequency divider configured to receive and divide the at least one intermediate frequency by a second predefined factor to generate the at least one feedback frequency.
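
The frequency relationships implied by the structure described above can be sketched numerically. In the following Python example, the reference frequency is divided by the first predefined factor to clock the DDS, and the main PLL is taken to be locked when the feedback frequency (the intermediate frequency divided by the second predefined factor) equals the DDS output frequency. All numeric values, the class name `FrequencyPlan`, and the assumption that the output frequency lies above the reference frequency at the down convert mixer are hypothetical and chosen only for illustration; the disclosure does not state them here.

```python
from dataclasses import dataclass


@dataclass
class FrequencyPlan:
    """Hypothetical frequency plan for the dual-loop synthesizer described above."""
    f_ref_hz: float      # single reference frequency from the sampling PLL
    n1: int              # first predefined (fixed) division factor
    f_dds_out_hz: float  # second clock frequency generated by the DDS
    n2: int              # second predefined (fixed) division factor

    @property
    def f_dds_clock_hz(self):
        # The first fixed divider turns the reference into the DDS clock.
        return self.f_ref_hz / self.n1

    @property
    def f_if_hz(self):
        # At lock the feedback (IF / n2) equals the DDS output, so IF = n2 * f_dds.
        return self.n2 * self.f_dds_out_hz

    @property
    def f_out_hz(self):
        # Assumption: the main VCO runs above the reference, so f_out = f_ref + IF.
        return self.f_ref_hz + self.f_if_hz

    def feedback_hz(self, f_out_hz):
        # Down convert mixer followed by the second fixed divider.
        return abs(f_out_hz - self.f_ref_hz) / self.n2

    def dds_output_is_valid(self):
        # A DDS can only synthesize outputs below half of its clock (Nyquist).
        return 0.0 < self.f_dds_out_hz < self.f_dds_clock_hz / 2.0


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    plan = FrequencyPlan(f_ref_hz=10e9, n1=10, f_dds_out_hz=200e6, n2=2)
    print("DDS clock  :", plan.f_dds_clock_hz)
    print("IF         :", plan.f_if_hz)
    print("Output     :", plan.f_out_hz)
    print("Feedback   :", plan.feedback_hz(plan.f_out_hz))
```

In this sketch the output frequency is tuned by changing the DDS output, while the main loop only has to multiply the small intermediate frequency by the second factor rather than the full output frequency.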

- Herein, the radar unit determines the distance and direction of each of the one or more objects. Further, the radar unit determines one or more characteristics of two close objects irrespective of the size of the objects. Further still, the radar unit differentiates between two or more types of objects when one object is visually obscuring another object. Additionally, the radar unit utilizes a modulated or non-modulated radio signal to determine the presence of a slow-moving target despite the very small Doppler frequency shift. Also, the radar unit utilizes a modulated or non-modulated radio signal to determine the presence of a close-range target despite the very short signal travel time.

- The object detection system may further include at least one additional sensor system, available on the autonomous vehicle, in conjunction with the radar unit. Further, at least one ultra-low phase noise frequency synthesizer further comprises at least one fixed frequency multiplier configured to receive and multiply the at least one output signal generated by at least one main PLL by a predefined factor to generate at least one final output signal of at least one final output frequency. At least one ultra-low phase noise frequency synthesizer is implemented on the same electronic circuitry or on a separate electronic circuitry. Further, the ultra-low phase noise frequency synthesizer may be used to generate the up or down-converting signal of the radar unit.

- Further, according to another embodiment of the present disclosure, a method for autonomous vehicles is disclosed. The method may include (but is not limited to): detecting the presence of one or more objects in one or more directions by a radar unit. Herein, the radar unit comprises: a transmitter for transmitting at least one radio signal to one or more objects; and a receiver for receiving at least one radio signal returned from one or more objects. Further, the method may include utilizing at least one ultra-low phase noise frequency synthesizer for refining the transmitted and the received signals, and thereby determining a phase noise and maintaining the quality of the transmitted and the received radio signals.

- Hereinabove, the at least one ultra-low phase noise frequency synthesizer comprises: (i) at least one clocking device configured to generate at least one first clock signal of at least one first clock frequency; (ii) at least one sampling Phase Locked Loop (PLL), wherein the at least one sampling PLL comprises: (a) at least one sampling phase detector configured to receive the at least one first clock signal and a single reference frequency to generate at least one first analog control voltage; and (b) at least one reference Voltage Controlled Oscillator (VCO) configured to receive the at least one analog control voltage to generate the single reference frequency; and (c) a Digital Phase/Frequency detector configured to receive the at least one first clock signal and a single reference frequency to generate at least a second analog control voltage; and (d) a two-way DC switch in communication with the Digital Phase/Frequency detector and the sampling phase detector; (iii) at least one first fixed frequency divider configured to receive the at least one reference frequency and to divide the at least one reference frequency by a first predefined factor to generate at least one clock signal for at least one high frequency low phase noise Direct Digital Synthesizer (DDS) clock signal; (iv) at least one high frequency low phase noise DDS configured to receive the at least one DDS clock signal and to generate at least one second clock signal of at least one second clock frequency; and (v) at least one main Phase Locked Loop (PLL), wherein the at least one main PLL comprises: (a) at least one high frequency Digital Phase/Frequency detector configured to receive and compare the at least one second clock frequency and at least one feedback frequency to generate at least one second analog control voltage and at least one digital control voltage; (b) at least one main VCO configured to receive the at least one first analog control voltage or the at least one second analog 
control voltage and generate at least one output signal of at least one output frequency, wherein the at least one digital control voltage controls which of the at least one first analog control voltage or the at least one second analog control voltage is received by the at least one main VCO; (c) at least one down convert mixer configured to mix the at least one output frequency and the reference frequency to generate at least one intermediate frequency; and (d) at least one second fixed frequency divider configured to receive and divide the at least one intermediate frequency by a second predefined factor to generate the at least one feedback frequency.

- Herein, the method may further include receiving and multiplying, by the ultra-low phase noise frequency synthesizer, the at least one output signal by a predefined factor to generate at least one final output signal of at least one final output frequency. Further, the method may generate the up-converting or down-converting signal of the radar unit. Furthermore, the method may determine the presence of a slow-moving target despite the very small Doppler frequency shift. The method may also include determining the presence of a close-range target despite the very short signal travel time. Additionally, the method may determine the distance and direction of each of the one or more objects, as well as the type of material an object is made of. The method may also include a step of activating one or more additional sensors for operation in conjunction with the radar unit. The method may determine the characteristics of two close objects irrespective of the size of the objects. Further, the method may differentiate between two or more types of objects when one object is visually obscuring another object.
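
To give a sense of scale for the "very small Doppler frequency shift" and "very short signal travel time" mentioned above, the standard monostatic radar relations can be evaluated directly. This is a rough sketch using textbook formulas (`f_d = 2 v f_c / c` and `t = 2 R / c`); the 77 GHz carrier and the target speed and range are assumed example values, not figures from this disclosure.

```python
C_MPS = 299_792_458.0  # speed of light in vacuum, m/s


def doppler_shift_hz(radial_speed_mps: float, carrier_hz: float) -> float:
    """Two-way Doppler shift for a target closing at radial_speed_mps."""
    return 2.0 * radial_speed_mps * carrier_hz / C_MPS


def round_trip_time_s(range_m: float) -> float:
    """Time for the signal to reach a target at range_m and return."""
    return 2.0 * range_m / C_MPS


def range_from_delay_m(delay_s: float) -> float:
    """Inverse of round_trip_time_s: target range from measured delay."""
    return C_MPS * delay_s / 2.0


# Assumed example: a pedestrian-speed target 1 m away, 77 GHz carrier.
carrier_hz = 77e9
shift_hz = doppler_shift_hz(radial_speed_mps=1.0, carrier_hz=carrier_hz)
delay_s = round_trip_time_s(range_m=1.0)
print(f"Doppler shift: {shift_hz:.1f} Hz on a {carrier_hz / 1e9:.0f} GHz carrier")
print(f"Round-trip delay: {delay_s * 1e9:.2f} ns")
```

Under these assumptions the Doppler shift is a few hundred hertz on a carrier of tens of gigahertz, and the round-trip delay is a few nanoseconds, so the returned signal sits very close to the carrier, where phase noise is typically strongest.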

- According to an embodiment of the present disclosure, a detection system comprising a radar unit communicably coupled to at least one ultra-low phase noise frequency synthesizer is provided. The radar unit is configured for detecting the presence of one or more objects in one or more directions. Herein, the radar unit comprises: a transmitter for transmitting at least one radio signal; and a receiver for receiving at least one radio signal returned from one or more objects/targets. Further, the detection system may include at least one ultra-low phase noise frequency synthesizer that may be configured for refining the returned at least one radio signal to reduce phase noise therefrom.

- Herein, the ultra-low phase noise frequency synthesizer is a critical part of the system, regardless of how it is implemented. The ultra-low phase noise frequency synthesizer comprises one main PLL (Phase Locked Loop) and one reference sampling PLL. The main PLL comprises one high-frequency DDS (Direct Digital Synthesizer), one Digital Phase Frequency Detector, one main VCO (Voltage Controlled Oscillator), one internal frequency divider, one output frequency divider or multiplier, and one down convert mixer. The reference sampling PLL comprises one reference clock, one sampling phase detector, and one reference VCO. This embodiment provides a significant improvement in the overall system output phase noise. The synthesizer design is based on the following technical approaches: a) using a dual-loop approach to reduce the frequency multiplication number, b) using a sampling PLL as the reference PLL to make its noise contribution negligible, c) using a DDS to provide a high-frequency input to the main PLL, and d) using a high-frequency Digital Phase Frequency Detector in the main PLL.
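
The benefit of reducing the frequency multiplication number in approach a) can be illustrated with the general rule that multiplying a frequency by `N` raises its phase noise by `20 * log10(N)` dB. The sketch below applies that rule to two hypothetical loop configurations; the divider ratios are illustrative assumptions chosen only to show the trend, not values from this disclosure.

```python
import math


def multiplication_penalty_db(n: float) -> float:
    """Phase noise increase, in dB, caused by multiplying a frequency by n."""
    if n <= 0:
        raise ValueError("multiplication number must be positive")
    return 20.0 * math.log10(n)


# Hypothetical single-loop case: a 10 GHz output locked to a 100 MHz
# comparison frequency needs a multiplication number of 100.
single_loop_n = 10e9 / 100e6

# Hypothetical dual-loop case: the mixer first translates the output down
# to a 1 GHz intermediate frequency, which is compared at 500 MHz.
dual_loop_n = 1e9 / 500e6

print(f"Single loop: N = {single_loop_n:.0f}, "
      f"penalty = {multiplication_penalty_db(single_loop_n):.1f} dB")
print(f"Dual loop:   N = {dual_loop_n:.0f}, "
      f"penalty = {multiplication_penalty_db(dual_loop_n):.1f} dB")
```

The comparison only covers the multiplication term; in a dual-loop design the reference PLL's own noise is added through the mixer, which is why approach b) aims to make that contribution negligible.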

- According to an embodiment of the present disclosure, a detection system comprising a radar unit and an ultra-low phase noise frequency synthesizer is provided. The system is made up of a System on Chip (SoC) module. The radar unit is configured for detecting the presence of one or more objects in one or more directions. The radar unit comprises: a transmitter for transmitting at least one radio signal; and a receiver for receiving at least one radio signal returned from one or more objects/targets. In an embodiment, the transmit and receive signal frequencies might be equal, for example when there is no Doppler effect. In an embodiment, the transmit and receive frequencies might also be different, for example in cases where the Doppler effect is present. The ultra-low phase noise frequency synthesizer comprises one main PLL (Phase Locked Loop) and one reference sampling PLL. The main PLL further comprises one Fractional-N Synthesizer chip, one primary VCO (Voltage Controlled Oscillator), and one down convert mixer. The Fractional-N Synthesizer chip includes one Digital Phase Detector and one software controllable variable frequency divider. The reference sampling PLL comprises one sampling PLL and one reference VCO. This embodiment provides multiple improvements in system output, which are based on the following technical approaches: a) using a dual-loop approach to reduce the frequency multiplication number, b) using a sampling PLL to make its noise contribution negligible, and c) using a high-frequency Fractional-N Synthesizer chip in the main PLL.
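
As a rough illustration of how a software controllable variable frequency divider might be programmed in this arrangement, the sketch below computes integer and fractional divider settings for a desired output frequency. It assumes the down convert mixer yields `f_out - f_ref` and that the divider is expressed as `n_int + numerator / modulus`; the function names, the comparison frequency, and the modulus are hypothetical and do not describe any particular Fractional-N Synthesizer chip.

```python
from fractions import Fraction


def fractional_n_settings(f_out_hz: int, f_ref_hz: int,
                          f_pfd_hz: int, modulus: int) -> tuple[int, int]:
    """Return (n_int, numerator) so that IF / (n_int + numerator/modulus)
    is as close as possible to the comparison frequency f_pfd_hz."""
    intermediate_hz = f_out_hz - f_ref_hz  # assumed mixer output
    if intermediate_hz <= 0:
        raise ValueError("output must be above the reference in this sketch")
    n_int, remainder_hz = divmod(intermediate_hz, f_pfd_hz)
    numerator = round(Fraction(remainder_hz * modulus, f_pfd_hz))
    if numerator == modulus:  # rounding carried into the integer part
        n_int, numerator = n_int + 1, 0
    return n_int, numerator


def achieved_output_hz(f_ref_hz: int, f_pfd_hz: int,
                       n_int: int, numerator: int, modulus: int) -> Fraction:
    """Output frequency the loop would settle at for the given settings."""
    return f_ref_hz + f_pfd_hz * (n_int + Fraction(numerator, modulus))


# Illustrative values only; none of these come from the disclosure.
n_int, numerator = fractional_n_settings(
    f_out_hz=11_025_000_000, f_ref_hz=10_000_000_000,
    f_pfd_hz=100_000_000, modulus=1000)
print(f"n_int = {n_int}, numerator = {numerator}")
print(f"achieved = {achieved_output_hz(10_000_000_000, 100_000_000, n_int, numerator, 1000)} Hz")
```

Because the divider acts on the mixed-down intermediate frequency rather than on the full output frequency, the divider value in this sketch stays small, which is consistent with approach a) above.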

- In an additional embodiment of the present disclosure, a vehicle having a detection system is disclosed. The detection system may be implemented for detecting information corresponding to one or more objects, the detection system comprising: a radar unit for transmitting radio signals and for receiving the returned radio signal(s) from one or more objects/targets; and at least one ultra-low phase noise frequency synthesizer for refining the returned signals to reduce the effect of phase noise in the returned radio signals. Further, the detection system comprises a processor for processing the refined signals to determine one or more characteristics corresponding to the one or more objects, the processor determining one or more actions based on one or more factors and the one or more characteristics corresponding to the one or more objects. The processor may further determine one or more actions adoptable by the vehicle based on one or more characteristics that may originate from the radar system alone or in conjunction with information originating from another sensor. The vehicle further includes one or more components communicably coupled to the processor for performing the determined one or more actions.

- The detection system may further include a memory for storing information and characteristics corresponding to one or more objects, and actions performed by the vehicle.

- Hereinabove, at least one ultra-low phase noise frequency synthesizer may be implemented in any manner as described further in the detailed description of this disclosure. Further, the radar unit comprises at least one of: traditional single antenna radar, dual or multi-antenna radar, synthetic aperture radar, and one or more other radars. Further, in an embodiment, the processor may determine the phase shift in frequencies of the transmitted radio signals and the returned radio signals. Such phase shift (difference in phase noise frequency) may further be analyzed in light of a frequency of the refined radio signal to self-evaluate the overall performance of the detection system (or specific performance of the ultra-low phase noise frequency synthesizer).

- The preceding is a simplified summary to provide an understanding of some aspects of embodiments of the present disclosure. This summary is neither an extensive nor exhaustive overview of the present disclosure and its various embodiments. The summary presents selected concepts of the embodiments of the present disclosure in a simplified form as an introduction to the more detailed description presented below. As will be appreciated, other embodiments of the present disclosure are possible utilizing, alone or in combination, one or more of the features set forth above or described in detail below.